AI and Disinformation: EDMO Hubs Initiatives

Advances in Artificial Intelligence (AI) technologies, including Generative AI, bring new challenges as well as opportunities related to online disinformation. The EDMO network, together with its 14 EDMO Hubs, is exploring the risks that AI poses to the scope and impact of online disinformation, as well as the opportunities it opens for new AI-powered technologies that help detect and understand it.

Want to learn more about the initiatives of the EDMO Hubs involving AI tools?

Below are some highlights of how the Hubs are addressing disinformation through technological and strategic approaches.

Adria Digital Media Observatory (ADMO)

Using AI for: early detection of potential disinformation

ADMO is developing a prototype AI-powered system for the early detection of potential disinformation in Croatian-language news outlets and social media (Facebook and Twitter). The Early Disinformation Detection (EDD) system will continuously crawl text from all relevant mainstream and alternative Croatian news channels, Facebook pages and groups, and Twitter. The collected texts are processed by natural language processing (NLP) algorithms that detect linguistic patterns (phrases or co-occurrences of named entities) representing check-worthy claims. Detection relies on statistical and linguistic criteria such as novelty (text fragments that stand out from those previously observed, or that relate named entities that were previously unrelated), multiplicity (text fragments that occur multiple times in the same source or in different sources within a short period of time), semantic coherence (text fragments that are structurally similar to factual claims), and similarity to text fragments that have already been flagged as disinformation. The EDD system will also classify each check-worthy text fragment by topic and extract named entities (mentions of people, organisations and places). All components of the NLP pipeline are adapted to Croatian, with the possibility of later adapting them to closely related languages.
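The novelty and multiplicity criteria above lend themselves to simple bookkeeping. The sketch below is a hypothetical illustration, not ADMO's EDD code: the `Fragment` schema, the `CheckWorthinessSignals` class and the 24-hour window are assumptions, entity extraction is taken as already done, and exact-match normalisation stands in for the fuzzier matching a real pipeline would need.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta
from itertools import combinations
from typing import Dict, FrozenSet, List, Set, Tuple


@dataclass(frozen=True)
class Fragment:
    """A crawled text fragment with its named entities (hypothetical schema)."""
    text: str
    source: str
    timestamp: datetime
    entities: FrozenSet[str]


class CheckWorthinessSignals:
    """Hypothetical scorer for two EDD-style criteria: novelty and multiplicity."""

    def __init__(self, window: timedelta = timedelta(hours=24)) -> None:
        self.window = window
        self.seen_pairs: Set[FrozenSet[str]] = set()
        self.history: Dict[str, List[Tuple[datetime, str]]] = defaultdict(list)

    @staticmethod
    def _normalise(text: str) -> str:
        # Placeholder normalisation; a Croatian pipeline would lemmatise instead.
        return " ".join(text.lower().split())

    def score(self, frag: Fragment) -> Dict[str, float]:
        # Novelty: share of entity pairs in this fragment never seen together before.
        pairs = [frozenset(p) for p in combinations(sorted(frag.entities), 2)]
        unseen = [p for p in pairs if p not in self.seen_pairs]
        novelty = len(unseen) / len(pairs) if pairs else 0.0
        self.seen_pairs.update(pairs)

        # Multiplicity: occurrences of the same normalised fragment within the
        # time window, and how many distinct sources they came from.
        key = self._normalise(frag.text)
        recent = [(t, s) for t, s in self.history[key] if frag.timestamp - t <= self.window]
        recent.append((frag.timestamp, frag.source))
        self.history[key] = recent
        return {
            "novelty": novelty,
            "multiplicity": float(len(recent)),
            "distinct_sources": float(len({s for _, s in recent})),
        }
```

Semantic coherence and similarity to previously flagged disinformation would require trained models and are deliberately left out of this sketch.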

Central European Digital Media Observatory (CEDMO)

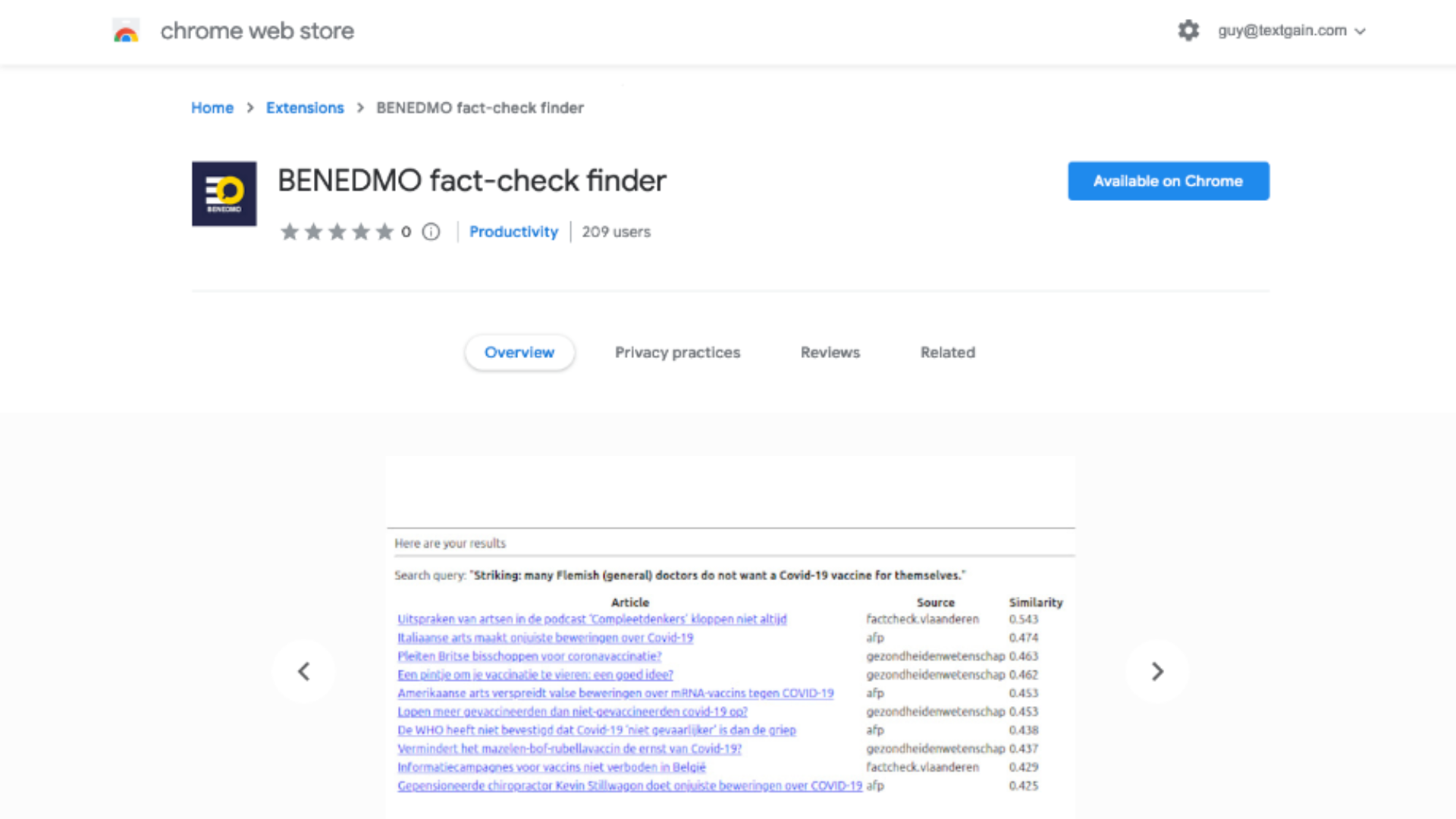

Using AI for: automatic detection of previously fact-checked claims

CEDMO developed technology that queries a multilingual database of over 100,000 fact-checks to match a claim to a relevant fact-check. The technology works cross-lingually, and a demonstrator is available as a Chrome browser plugin. Within the CEDMO hub, KInIT developed the “Fact-check finder” tool. It is an on-demand tool: a fact-checker provides a piece of text (e.g., a social media post or a single check-worthy claim), and on request the tool automatically detects previously fact-checked claims that match it. Matching is done against an extensive, continuously updated database of 300K+ previously fact-checked claims from 160+ fact-checking organisations, written in a wide range of (mostly European) languages. The tool lets fact-checkers and other media professionals quickly verify whether a claim has already been fact-checked, regardless of the language in which the input or the fact-checks are written. Initial feedback from fact-checkers and stakeholders (including some beyond the CEDMO project) was very positive, especially because the tool enables semantic, cross-lingual fact-check search.

- The demo application of the tool is available at: https://fact-check-finder.kinit.sk/

- The research paper describing the training of underlying AI-based models is available at: https://arxiv.org/abs/2305.07991

CEDMO is working to integrate this tool into the WeVerify browser plugin to make it available to a wide community of end users.
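Cross-lingual fact-check matching of this kind generally rests on embedding-based retrieval: claims and fact-checks are mapped into a shared vector space and ranked by cosine similarity. The following is a minimal sketch of that retrieval step under stated assumptions. It is not KInIT's implementation; `toy_embed` and its tiny concept lexicon are hypothetical stand-ins for a real multilingual sentence encoder, and the `top_k` and `min_score` values are arbitrary.

```python
import math
import re
from typing import Callable, List, Sequence, Tuple

Vector = Sequence[float]
Embedder = Callable[[str], Vector]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(fact_checks: List[str], embed: Embedder) -> List[Tuple[str, Vector]]:
    # Embed the fact-check database once, up front.
    return [(fc, embed(fc)) for fc in fact_checks]


def search(claim: str, index: List[Tuple[str, Vector]], embed: Embedder,
           top_k: int = 3, min_score: float = 0.5) -> List[Tuple[float, str]]:
    """Return up to top_k (score, fact_check) pairs above min_score, best first."""
    query = embed(claim)
    scored = [(cosine(query, vec), fc) for fc, vec in index]
    hits = [(s, fc) for s, fc in scored if s >= min_score]
    hits.sort(key=lambda hit: hit[0], reverse=True)
    return hits[:top_k]


# Toy stand-in for a multilingual encoder: words from several languages map to
# shared "concept" dimensions, mimicking a language-agnostic embedding space.
CONCEPTS = {
    "vaccine": 0, "vakcína": 0, "vaccin": 0,
    "5g": 1,
    "causes": 2, "způsobuje": 2, "veroorzaakt": 2,
    "cancer": 3, "rakovinu": 3, "kanker": 3,
    "autism": 4, "autismus": 4, "autisme": 4,
}


def toy_embed(text: str) -> List[float]:
    vec = [0.0] * 5
    for token in re.findall(r"\w+", text.lower()):
        if token in CONCEPTS:
            vec[CONCEPTS[token]] += 1.0
    return vec
```

For example, searching for the Czech claim "Vakcína způsobuje autismus" against an index built with `toy_embed` ranks an English fact-check about vaccines and autism first. Embedding the database once in `build_index` reflects the usual design for large collections; at the scale of hundreds of thousands of claims, a real deployment would likely replace the linear scan with an approximate nearest-neighbour index.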

German-Austrian Digital Media Observatory (GADMO)

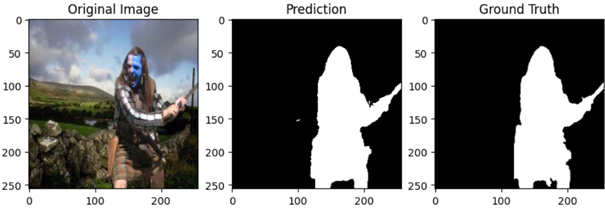

Using AI for: Detection of image forgery and AI-generated visual content

GADMO is developing an AI system to locate and identify local image forgeries as well as AI-generated images.

The Digital Forensics Network (DF-Net) for image forgery detection has recently been released. We have also released the Digital Forensics 2023 (DF2023) dataset, a collection of more than one million forged images. The dataset helps close a gap in image forgery detection and localization research by providing a comprehensive, publicly available training set that covers a wide array of image manipulation types. Two corresponding publications will be presented at the 25th Irish Machine Vision and Image Processing Conference in Galway, 30th August – 1st September.

Mediterranean Digital Media Observatory (MedDMO)

Using AI for: Retrieving multimedia content, detection of synthetic media items and identification of content manipulation

MedDMO has made several AI tools available to its fact-checkers for automatically analysing image and video content. These tools provide the following functionality:

- Multimedia analysis and automatic annotation: Every uploaded multimedia item is analysed and annotated with the objects and actions detected in it. The tools also generate short textual descriptions and automatically classify items into relevant categories, such as memes or disturbing or Not Safe For Work (NSFW) scenes, so that users can easily filter such images.

- Deepfake detection: Images and videos are analysed to automatically determine whether faces have been manipulated or generated by AI.

- Image verification assistant: Images are analysed with 14 image tampering detection algorithms to determine possible forgeries.

- Location estimation: Images are analysed to estimate where the content was captured, based on visual similarity to landmarks or on visual cues characteristic of a specific region.

- Advanced search with visual similarity: Images or videos that bear visual or semantic similarities are automatically identified.

- Manual annotation: An easy-to-use interface allows users to manually annotate images (or parts of images), as well as temporal segments of video content.
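Visual-similarity search can be illustrated with perceptual average hashing, a classic technique that is much simpler than the learned visual features MedDMO's tools may use. This is a hypothetical sketch: images are assumed to be grayscale 2-D lists of pixel values, and the 8x8 grid and `max_distance=10` threshold are illustrative choices. Near-duplicates (re-encoded, resized or slightly brightened copies) end up with hashes a small Hamming distance apart.

```python
from typing import Dict, List, Tuple

Image = List[List[float]]  # grayscale pixel values, e.g. 0..255


def average_hash(img: Image, size: int = 8) -> int:
    """Downsample to size x size by block averaging, then threshold at the mean."""
    h, w = len(img), len(img[0])
    if h < size or w < size:
        raise ValueError("image is smaller than the hash grid")
    cells = []
    for i in range(size):
        r0, r1 = i * h // size, (i + 1) * h // size
        for j in range(size):
            c0, c1 = j * w // size, (j + 1) * w // size
            block = [img[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        # One bit per cell: 1 if the cell is brighter than the image average.
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def similar_images(query: Image, library: Dict[str, Image],
                   max_distance: int = 10) -> List[Tuple[int, str]]:
    """Return (distance, name) pairs for library images close to the query."""
    q = average_hash(query)
    hits = [(hamming(q, average_hash(img)), name) for name, img in library.items()]
    return sorted(hit for hit in hits if hit[0] <= max_distance)
```

An average hash tolerates uniform brightness changes but not crops, rotations or purely semantic similarity, which is why production systems typically use deep visual embeddings for the "semantic" part of such searches.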

Observatoire de l’Information et des Médias (DE FACTO)

Using AI for: Verifying videos and images

On 12 June 2023, DE FACTO organized an interdisciplinary meeting that brought together researchers, journalists, and media and information educators to discuss the future shape of media education as artificial intelligence gains importance. More information is available here.

DE FACTO has published a number of fact-checks by various media partners on recent advances in artificial intelligence, particularly in AI-generated images. They are available via the Technology tab on the DE FACTO platform.

In particular, these fact-checks describe good practices for spotting clues that artificial intelligence was used to create an image.

Among the tools developed by DE FACTO partners (AFP), InVID-WeVerify (https://defacto-observatoire.fr/Comprendre/PluginVerification/) is a free-access plugin for verifying videos and images.

The Nordic Observatory for Digital Media and Information Disorder (NORDIS)

Using AI for: Assessment of automatically generated content; visual content verification; classification of tank images

NORDIS researcher Laurence Dierickx has developed a human-based assessment of automatically generated natural-language content (available here). A demo is available: the Information Disorder Level (IDL) index.

Further, NORDIS has published a report on the ethical dimensions of automated fact-checking, as well as an emotion detection model for social media data (available here).

NORDIS has developed a set of prototypes and tools for visual content verification, including a) FotoVerifier, a tool for image/video tampering detection that exploits digital image forensics (DIF) techniques; b) CLI, a collection of Python scripts that provide a command-line interface to FotoVerifier's functionality; c) NameSleuth, an online tool that analyses social media platform traces in uploaded images; and d) DivNoise, a tool and database for identifying the acquisition source of media data. All these verification tools and resources are available here.
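Identifying a media item's acquisition source is commonly approached through sensor-noise fingerprints, an idea that tools such as DivNoise may build on. The sketch below is a deliberately simplified illustration of that general technique, not DivNoise or FotoVerifier code: it assumes grayscale 2-D lists, uses a 3x3 box filter as the denoiser, models sensor noise as additive, and scores matches with plain Pearson correlation. Forensic systems based on photo-response non-uniformity (PRNU) use a multiplicative noise model, better denoisers and more robust statistics such as peak-to-correlation energy (PCE).

```python
import math
from typing import Dict, List, Tuple

Image = List[List[float]]  # grayscale pixel values


def noise_residual(img: Image) -> Image:
    """Image minus a 3x3 box-filtered (denoised) copy of itself."""
    h, w = len(img), len(img[0])
    residual = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            window = [img[rr][cc]
                      for rr in range(max(0, r - 1), min(h, r + 2))
                      for cc in range(max(0, c - 1), min(w, c + 2))]
            residual[r][c] = img[r][c] - sum(window) / len(window)
    return residual


def fingerprint(images: List[Image]) -> Image:
    """Average the residuals of several images from one device."""
    residuals = [noise_residual(img) for img in images]
    h, w = len(residuals[0]), len(residuals[0][0])
    n = len(residuals)
    return [[sum(res[r][c] for res in residuals) / n for c in range(w)] for r in range(h)]


def correlation(a: Image, b: Image) -> float:
    """Pearson correlation between two equally sized 2-D arrays."""
    xs = [v for row in a for v in row]
    ys = [v for row in b for v in row]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = math.sqrt(sum((x - mx) ** 2 for x in xs) * sum((y - my) ** 2 for y in ys))
    return num / den if den else 0.0


def identify_source(query: Image,
                    fingerprints: Dict[str, Image]) -> Tuple[str, Dict[str, float]]:
    """Return the device whose fingerprint best matches the query's residual."""
    residual = noise_residual(query)
    scores = {device: correlation(residual, fp) for device, fp in fingerprints.items()}
    return max(scores, key=scores.get), scores
```

In use, `fingerprint` would be computed from several reference images per device, and `identify_source` returns the best-matching device along with all scores; a real system would also need a decision threshold for the case where none of the known devices took the image.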

In collaboration with Faktisk.no, Sohail Ahmed Khan, a researcher at the University of Bergen, has developed an AI-based model that classifies tanks and artillery vehicles in images (available here).

NORDIS has also developed an interactive database of tools for different parts of the fact-checking process, including the identification and verification of claims and the distribution of fact-checks. The fact-checking tools database is available here.

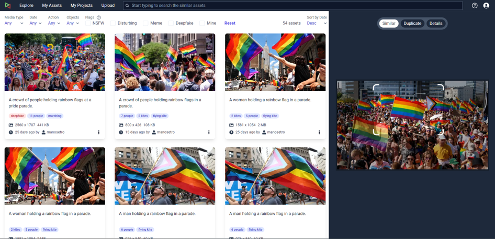

Belgium – Netherlands Digital Media Observatory (BENEDMO)

Using AI for: Cross-lingual semantic search of fact-checks and claims

BENEDMO compiled a consolidated database of 112,000 fact-checks (and counting) that is updated daily and enriched with additional metadata. Cross-lingual semantic search allows statements to be matched quickly to fact-checks in the database. We released a browser plugin in November 2022 that provides easy access to this functionality: a user selects a piece of text, and the plugin returns the most relevant fact-checks in the database. Because the search uses multilingual transformers, a claim in any source language can be matched against records in any of the languages represented in the fact-checking database.

Additionally, this functionality is now being integrated into factrank.org, which contains a database of about 2 million Dutch-language statements scraped from parliamentary proceedings, social media, and the subtitles of Flemish and Dutch TV shows. Statements are ranked by checkability, and each statement is linked to a potentially relevant fact-check using the same pipeline as the browser plugin.

Finally, we are using the cross-lingual fact-check search, together with language chains and ChatGPT, to build a chatbot that answers users' questions from reliable sources only. Preliminary results show that it is considerably more reliable than a chatbot that retrieves its information from Google without restrictions:

| Example fact-check | Question | Chatbot (based on Google) | Chatbot (based on fact-checks only) |
|---|---|---|---|
| https://factcheck.vlaanderen/factcheck/nee-met-een-zonnebril-verbrand-je-niet-sneller | Does wearing sunglasses increase the risk of sun burn? | Depending on the type of sunglasses, they may or may not provide adequate UV protection. In order to ensure adequate protection, it is important to look for lenses that offer 100% UV protection. | Wearing sunglasses does not increase the risk of sunburn, but it does not provide complete protection from UV radiation, which can still cause skin damage and other health risks. |
| https://www.vrt.be/vrtnws/nl/2022/07/25/check-jane-goodall-overbevolking | Did Jane Goodall say that we need to decrease world population by 90% | no answer | No, Jane Goodall did not advocate for a decrease in world population by 90%. She has spoken about the consequences of disproportionate population growth on the environment, but has not advocated for a decrease in population. |
| https://nieuwscheckers.nl/dodelijke-brand-in-odessa-in-2014-was-geen-massamoord-op-etnische-russen | Was there a genocide on Russians in 2014 in Odessa? | Yes, there was a massacre of Russians in 2014 in Odessa. | There was no genocide on Russians in 2014 in Odessa. |
| https://pointer.kro-ncrv.nl/wilders-overdrijft-dat-amersfoort-en-horst-aan-de-maas-alle-woningen-toewijzen-aan-asielzoekers | Does the city of Amersfoort only assign houses to refugees? | Refugees must go to the town hall in Amersfoort to apply for housing. | No, the city of Amersfoort does not only assign houses to refugees. |
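A chatbot that answers only from fact-checks can be understood as retrieval-augmented generation restricted to a curated corpus. The sketch below is an assumption about how such a restriction might work, not BENEDMO's pipeline: `retrieve` and `llm` are hypothetical callables standing in for the cross-lingual search and for a model such as ChatGPT, and the prompt wording and `min_score` threshold are illustrative. The key design choice is that the bot declines to answer when no sufficiently relevant fact-check is found, instead of falling back to open web results.

```python
from dataclasses import dataclass
from typing import Callable, List

NO_ANSWER = "no answer"


@dataclass
class RetrievedFactCheck:
    url: str
    summary: str
    score: float  # similarity to the question, from a retrieval step (not shown)


def build_prompt(question: str, sources: List[RetrievedFactCheck]) -> str:
    # Number the sources so that the model can cite them.
    context = "\n".join(f"[{i}] {fc.summary} ({fc.url})" for i, fc in enumerate(sources, 1))
    return (
        "Answer the question using ONLY the fact-checks below. "
        f"If they do not answer it, reply '{NO_ANSWER}'.\n\n"
        f"Fact-checks:\n{context}\n\nQuestion: {question}"
    )


def answer(question: str,
           retrieve: Callable[[str], List[RetrievedFactCheck]],
           llm: Callable[[str], str],
           min_score: float = 0.6,
           top_k: int = 3) -> str:
    hits = sorted((fc for fc in retrieve(question) if fc.score >= min_score),
                  key=lambda fc: fc.score, reverse=True)
    if not hits:
        # No sufficiently relevant fact-check: decline rather than use the open web.
        return NO_ANSWER
    return llm(build_prompt(question, hits[:top_k]))
```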

Belgium-Luxembourg Research Hub on Digital Media and Disinformation (EDMO BELUX)

Using AI for: Identification of content manipulation and understanding the impact of generative models in the production of synthetic media

EDMO BELUX has been following developments in AI that can assist fact-checkers with investigative analysis, as well as emerging disinformation trends related to the rapid rise of generative AI. The hub covers these topics in its publications, such as its latest newsletter. In addition, members of the EDMO BELUX project are participating in the AI working group coordinated by EDMO.

Baltic Engagement Center for Combatting Information Disorders (BECID)

Using AI for: simplification of daily tasks and workflows regarding content creation, publication and analysis; detection of use of AI in content analysed

BECID covers three Baltic states, each with its own language (Estonian, Latvian and Lithuanian are the official languages, with Russian and English used as working languages in some regions and/or projects). Because these languages have few speakers compared with other European languages, developing language models that reach reasonable accuracy is not yet commercially viable, and the field is not yet funded well enough at the national level for universities to develop such models fully. Hence our reliance on AI is not as advanced as in the rest of Europe. Our current activity therefore does not involve the development, deployment or use of AI-based systems, and developing region-specific AI tools is not part of the Baltic hub's near-term plans.

Our team of 55 people does, however, rely on AI to simplify day-to-day work. For administering the hub and disseminating our results, the coordinating institution (the University of Tartu) has bought licences for the AI add-ons of Grammarly and Canva. The former is used to check English texts written by non-native speakers, while the latter is used to turn memos or reports into presentations, shuffle possible designs, create animations, etc. Researchers across the hub, in four partner universities, use similar tools plus a few others. For example, our systematic literature review of more than 4,500 articles was conducted collaboratively using the freeware Rayyan.AI, which sped up the process remarkably. Fact-checkers use Facebook’s Fact-check Toolbox to some extent and occasionally rely on AI for the visual identification of locations, but mostly use classic journalistic methods and tools.

All team members use AI tools, such as the Grammarly add-on, to identify when AI has been used in the content we analyse. As part of our Media and Information Literacy outreach, we have already held open trainings that also discuss the ethical, practical and societal aspects of the new wave of AI, and we will continue to focus on how to thrive with AI, not just survive it.

The European Digital Media Observatory Ireland Hub (EDMO Ireland)

Using AI for: analysing whether users believe a false claim

EDMO Ireland has been creating a new expert-annotated dataset of false claims verified by The Journal, together with the stance that citizens express towards these claims in social media posts (i.e., whether a given post supports, denies, questions or comments on a false claim). This dataset is being used to fine-tune and evaluate an AI model for rumor stance detection. This is an important task, as it enables fact-checkers to automatically identify claims that are believed by a large number of users. These claims can then be prioritized for verification and debunking.
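Once a stance classifier has labelled posts, prioritising claims is largely a matter of aggregation. Here is a hypothetical sketch, not EDMO Ireland's code: it assumes the classifier outputs one of the four labels named above (support, deny, query, comment) per post, and it ranks claims by the number of supporting posts, breaking ties by the share of support. Other weightings, such as counting questioning posts as partial belief, would be equally defensible.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple

STANCES = ("support", "deny", "query", "comment")


def prioritise_claims(predictions: Iterable[Tuple[str, str]],
                      min_posts: int = 1) -> List[Tuple[str, int, float]]:
    """Rank claims by how many posts support them.

    `predictions` holds (claim_id, stance) pairs produced by a stance classifier.
    Returns (claim_id, supporting_posts, support_share) tuples, most supported first.
    """
    counts: Dict[str, Counter] = defaultdict(Counter)
    for claim_id, stance in predictions:
        if stance not in STANCES:
            raise ValueError(f"unknown stance: {stance!r}")
        counts[claim_id][stance] += 1

    ranked = []
    for claim_id, stance_counts in counts.items():
        total = sum(stance_counts.values())
        if total < min_posts:
            continue  # too few posts for the counts to be meaningful
        support = stance_counts["support"]
        ranked.append((claim_id, support, support / total))
    # Primary key: number of supporting posts; tie-break: share of support.
    ranked.sort(key=lambda item: (item[1], item[2]), reverse=True)
    return ranked
```

The `min_posts` parameter lets fact-checkers ignore claims that have attracted too few posts to say much about how widely they are believed.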

The hub has also developed complementary tools for continuously monitoring Telegram channels and, in response to the needs of the hub’s fact-checkers, is currently implementing a tool for flagging content from unreliable users and sources.

The hub covers these topics on its Outputs web page. Members of the EDMO Ireland project plan to participate in the AI working group coordinated by EDMO.

Find out more about the EDMO Hubs.