If Zuckerberg’s dismantling of the Third Party Fact-checking program reaches the EU, what kind of false stories could be allowed to circulate unchecked by professionals?

Organizations that contributed to this investigation: AFP, Correctiv, Demagog.cz, Demagog.pl, Ellinika Hoaxes, Facta, Maldita, Newtral, Polígrafo, Science Feedback, The Journal FactCheck

Enzo Panizio and Tommaso Canetta, the authors of the article, work for Facta, an organization that is part of Meta’s Third Party Fact-checking Program and a member of the International Fact-Checking Network (IFCN) and the European Fact-Checking Standards Network (EFCSN).

Mark Zuckerberg’s recent decision to dismantle Meta’s Third Party Fact-checking Program (3PFC) in the United States has ignited a wave of criticism and concern, especially as the CEO framed fact-checkers’ work as “censorship” and “politically biased”. Fact-checking networks (such as the European Fact-Checking Standards Network and the International Fact-Checking Network), the European Digital Media Observatory and independent experts argue that this rhetoric not only misrepresents the role of fact-checkers but also exposes the deeply political motivations behind Meta’s move.

The announcement has raised alarms among journalists, independent observers, and institutions, who see it as a retreat from Meta’s commitment to curbing disinformation. The EFCSN condemned Zuckerberg’s statements, calling them “false and malicious” and warning of the implications of weakening fact-checking systems at a time when election integrity and public trust in information are under increasing threat. The fact-checking organizations that take part in the Third-Party Fact-checking program have themselves explained why it is wrong and “dangerous” to compare the program to “censorship”, and why the accusation that fact-checkers are politically biased is baseless.

Meta can remove content, fact-checkers can’t

The Third-Party Fact-checking program (3PFC) works as follows: when an independent fact-checking organization debunks a specific piece of information, with an analysis that provides evidence of its partial or total falsity, a label is applied to the content on social media, warning users that the claims it contains could be false. Naturally, content can be labeled as false or misleading only if it can be demonstrated to be so through publicly available evidence. Fact-checking labels merely provide users with contextual information; they have no power to remove content. That power remains a prerogative jealously guarded by the platform owners, who have total control over what to show and what to hide in users’ feeds.

Moreover, Meta’s own data has previously highlighted the success of its fact-checking initiative. According to those data, labels applied to fact-checked posts significantly reduce the spread of misinformation, with millions of users choosing not to engage with flagged content. “Between July and December 2023, for example, over 68 million pieces of content viewed in the EU on Facebook and Instagram had fact-checking labels. When a fact-checked label is placed on a post, 95% of people don’t click through to view it”, Meta stated in a communication from early 2024 about its EU market and products. If the 3PFC is also ended in the European market and equally effective systems are not put in place, such a large volume of totally or partially false information could flood social media feeds without any warning.

Fact-checkers’ political bias reduced as much as possible

Zuckerberg also justified ending the program by alleging that fact-checkers had become “too politically biased”, a claim widely dismissed by experts as unfounded and harmful. Fact-checking organizations can participate in Meta’s Third-Party Fact-checking program only if they are members of the International Fact-Checking Network (IFCN) or, in Europe, of the European Fact-Checking Standards Network (EFCSN). IFCN and EFCSN set very high standards of political independence and impartiality, and compliance with their rules is verified through a rigorous assessment carried out by independent experts. For example, according to the EFCSN Code, member organizations must not “endorse or advise the public to vote for any political parties or candidates for public office”, or “conclude any agreement or partnership with a political party”, or “focus investigations unduly on one particular political party or side of the political spectrum”, or again “employ anyone who holds a salaried and/or prominent position in a political party”. Moreover, all fact-checking articles must undergo “at least one round of editing by someone other than the author before publication”. These are only some of the requirements, all designed to guarantee a high degree of political independence.

What can happen

So, what would be the impact of ending the 3PFC in the EU, if Zuckerberg pursued his plan to stop the initiative worldwide? Concretely, what kind of false stories would be allowed to spread through social media feeds (when not directly promoted by the algorithms) without any contextual information from professional, recognized fact-checking organizations showing them to be false? In this article, we examine a selection of ten disinformation stories that Meta’s European fact-checking program helped mitigate, involving internal crises, elections, climate disasters, pandemics, racist sentiment and various conspiracy theories. We then explain why relying exclusively on Community Notes could be problematic, particularly when the disinformation concerns polarizing issues.

10 – Mammograms, “the worst organized crime against women”, and other conspiracies

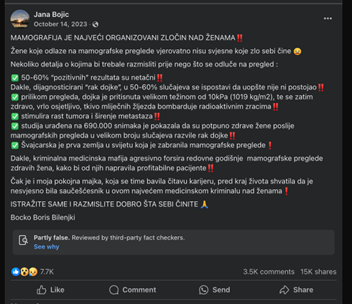

In November 2023, an alarming post gained traction on Facebook in Croatia, claiming that mammograms were part of “the worst organized crime against women”. The post listed numerous misleading and false statements, including the assertion that mammograms are designed to intentionally harm women rather than detect breast cancer. Fact-checkers from the Croatian Bureau of AFP debunked this narrative, pointing out that no credible scientific evidence supports the claim that mammograms are unsafe or ineffective. On the contrary, peer-reviewed research demonstrates that mammograms remain one of the most effective tools for early cancer detection, saving lives each year.

Similar claims, also debunked, have cast doubt on self-tests for detecting cervical cancer or promoted supposed cancer cures such as ivermectin or fenbendazole, even though there is no scientific evidence that they are effective against cancer in humans.

As can be seen in Image 1, the content was neither “censored” nor banned in any way; in fact, it reached a vast audience, with thousands of likes, views and shares. However, since independent fact-checking organizations had debunked some of its claims, the post carried a label at the end, linking to more accurate information for those who wanted to better understand the topic. By clicking on the label, users can still access the articles showing that the claims about the alleged dangers of mammography are “partly false”, as the label itself states.

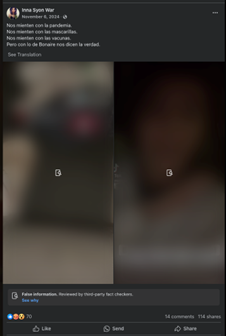

Even when a claim is entirely fabricated or has no connection to reality, as often happens with conspiracy theories, Meta’s system does not remove the post; it reduces its visibility by obscuring the content. For example, after the DANA floods in Spain in November 2024, a video falsely claimed that refrigerated trucks were secretly transporting bodies from a flooded shopping center, as part of a government cover-up of the true scale of the tragedy.

As can be seen in Image 3, a warning appears before users can view the content, making clear that it has been fact-checked and found to be fabricated. However, users can still choose to view it anyway, in two clicks, if they wish.

9 – Exploiting Compassion: the scam of a “3-year-old girl with cancer”

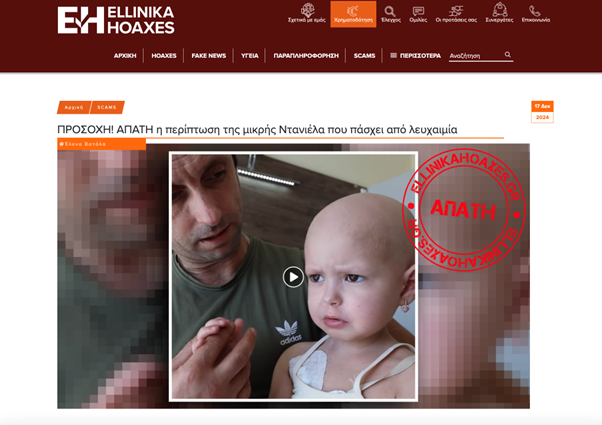

In December 2024, a financial scam spread on Facebook through sponsored ads, targeting Greek users. The campaign falsely claimed to support a 3-year-old girl named Daniela, described as a Greek child battling a rare form of cancer. In reality, the story was a fabrication, and the child in question is from Ukraine and unrelated to the fraudulent fundraiser.

The group behind the scam, linked to the organization Chance Letikva, misappropriated the child’s identity to solicit donations. By the time fact-checkers from Ellinika Hoaxes exposed the scheme, over €40,000 had been collected from more than 1,300 unsuspecting donors. Investigations revealed that this organization has orchestrated similar scams globally, exploiting the identities of real individuals to manipulate emotions for financial gain.

Following the publication of the fact-checking article by Ellinika Hoaxes, the Facebook profile spreading the scam was deactivated, reducing the exposure of unsuspecting users to a scheme designed to steal their money. As we will see in various cases, disinformation often has victims.

8 – Fueling social unrest

In January 2025, a viral video claimed to show an anti-terror demonstration in Magdeburg, Germany, where a deadly attack on a Christmas market had taken place in December 2024. The footage depicted a large group of people dressed in black, chanting and marching, and fueled speculation about the rise of grassroots protests against terrorism in the region. However, Correctiv explained that the video had nothing to do with anti-terror activism: it actually showed football fans in Dortmund celebrating their team, a context entirely unrelated to the claims made online.

Although real protests did take place, the aim was to exploit a striking, out-of-context video to inflame social unrest in a delicate situation. The misleading story was amplified on social media, where users shared it thousands of times, often attaching politically charged commentary about security and immigration in Germany.

7 – Political misinformation: fake endorsements and manipulations in electoral contexts

During political campaigns, disinformation targeting institutions or political figures always plays its part. In Spain, a claim spread on Instagram in April 2024 suggesting that Jordi Évole, a well-known journalist, had been hired by ERC (Esquerra Republicana de Catalunya) to campaign for the party in the Catalan elections. Fact-checkers at Maldita debunked this claim, revealing it as a fabricated story, made to look like an article from a trusted newspaper, that was designed to undermine the journalist’s credibility and associate him with partisan interests. Amplified on social media, the narrative fueled divisive commentary, further polarized political discourse and fostered mistrust in recognized sources of reliable information.

Similarly, in Germany, false claims circulated in January 2025 alleging that President Frank-Walter Steinmeier had threatened to annul the Bundestag elections if the “wrong party” won. Fact-checkers from Correctiv disproved this baseless assertion, which was entirely fabricated to erode trust in democratic institutions and spark outrage among voters.

6 – The DANA “deep state” conspiracy

In Spain, in the aftermath of the severe flooding caused by the extreme weather event known as DANA in 2024, amid the chaos of the emergency itself and several disinformation narratives, a video began circulating on Facebook featuring a man in military attire who claimed to be a sergeant revealing a “deep-state” conspiracy behind the flooding. The video, which gained significant traction on Meta platforms before being debunked, suggested that the DANA had been artificially induced as part of a hidden political agenda, fueling fears of government control over natural disasters.

However, Newtral debunked the claim, proving that the so-called sergeant was not a member of any military force. Instead, he was a civilian wearing a costume to lend credibility to his baseless allegations.

5 – Climate change-related disinformation in general

The DANA case mentioned above is not only one of several falsehoods concerning that specific climate disaster. It is also part of a significant volume of false content that denies or minimizes the impact of climate change and of human activity on it, and often even its very existence. Among the cases that fact-checking organizations in the EDMO network recently helped expose on Meta platforms are:

- Livestock dairy products – A false claim spread in Poland suggested that the dairy company Mlekovita was adding the methane-reducing feed additive Bovaer to its products, misleadingly portraying it as a harmful ingredient and implying that the substance could have dangerous effects on human health. Demagog.pl debunked this, explaining that Bovaer is not used in food products but is a feed supplement for reducing livestock emissions, tested and approved by the European Food Safety Authority. Scientific studies confirm that when used as intended in animal feed, Bovaer is safe and does not affect the milk or meat produced by the animals.

- Wildfires and climate change denial – Viral social media posts repeatedly claim that wildfires, such as those in California, Greece, and Canada, are unrelated to climate change. A recent example targeted the Los Angeles wildfires, falsely asserting they were caused solely by local factors (or even by alleged human plans) and dismissing climate change as a contributing factor. Science Feedback debunked the demonstrably false information and provided additional context, highlighting scientific evidence that rising temperatures and drought conditions significantly increase wildfire risk. Again, only a label was placed at the end of the specific Facebook post, which nonetheless reached thousands of views, shares, and likes.

- Antarctic ice is increasing, not decreasing – In December 2024, a Facebook post, also shared on other platforms, claimed that Antarctic ice is growing, contradicting climate change predictions. Facta debunked this assertion, demonstrating that while seasonal fluctuations exist, long-term data show a clear trend of ice loss due to rising global temperatures.

4 – Misinterpretation of a Hellenic Police report led to online hatred against the Romani community

In late August and early September 2024, a claim spread widely on social media stating that 86% of thefts and burglaries in Greece in 2022 had been committed by Romani individuals. The narrative gained traction, trending on various social platforms, and being reported in mainstream media and websites. However, the claim was based on a misinterpretation of a Hellenic Police report.

The report did not say that 86% of all thefts and burglaries were committed by Romani individuals. Instead, the percentage referred specifically to organized crime groups involved in theft and burglary—not general crime statistics. In reality, organized crime groups accounted for only 4% of all thefts and burglaries, making the claim highly misleading.

Ellinika Hoaxes debunked and corrected the claim, and fact-checking labels were applied to posts spreading the false information on both Facebook and Threads. These interventions helped prevent further amplification of the misleading statistic, which had already contributed to online hostility and discrimination against the Romani community, according to the Greek fact-checkers.

3 – Pandemic-related disinformation

As one might expect, the European fact-checking community has devoted a huge effort to countering false information related to COVID-19, which still persists in the European infosphere years after the pandemic. Among the many false stories on the issue, some have been addressed through the 3PFC on Meta platforms. One of the most persistent false claims alleged that EU and US health databases proved thousands of people had died from COVID-19 vaccines. The Journal debunked this narrative, explaining that these databases collect unverified reports and do not establish causality. Similarly, the drug ivermectin was promoted as an alternative to vaccines, despite scientific evidence showing it is ineffective in preventing or treating COVID-19.

Disinformation also targeted PCR tests, with viral claims falsely attributed to the test’s inventor, suggesting they were unreliable in detecting COVID-19. Another dangerous false narrative promoted chlorine dioxide, a substance commonly used as bleach, as a miracle cure, disregarding its severe health risks. Beyond health misinformation, COVID-19 became a backdrop for broader disinformation narratives. Some posts falsely suggested that COVID-19 vaccines were part of a deliberate effort to induce demographic decline by causing mass infertility. These are among the clearest examples of how disinformation can harm and, in severe cases, kill people.

Thanks to Meta’s program, fact-checkers were able to alert users that content was false, warning them not to trust what was spreading on the platform and providing the most reliable information available so that they could inform themselves. During events that take a heavy emotional toll, it is normal and human to believe in miraculous cures, but that is exactly where scams, and worse, lurk. As Meta itself has shown in its reports over recent years, a label and links to factual information can help a lot in these situations.

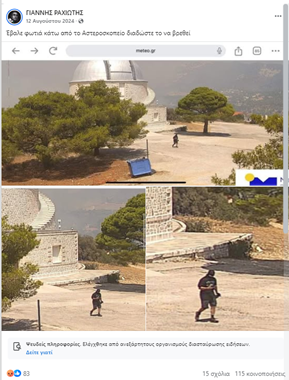

2 – Targeting an innocent volunteer for causing a huge wildfire

During the devastating Attica fires in August 2024, a photograph of a man near the Penteli Observatory threatened by flames went viral, with false accusations that he was the arsonist responsible for starting the blaze. The photo spread rapidly across social media, with calls to identify and apprehend him: “He set a fire under the Observatory, spread the word so he can be found”, for example, urged some users. The situation further escalated when Kyriakos Velopoulos, leader of the conservative parliamentary party Greek Solution, shared the image on his X account, adding political weight to the accusations.

In reality, the man was a volunteer actively helping with fire suppression efforts, as Ellinika Hoaxes pointed out. Mainstream media also supported the correction, but the damage was already done. The false narrative turned him into a scapegoat, subjecting him to public scrutiny, harassment, and fear for his safety, and showing how quickly misinformation can spiral out of control and also target individuals. Fact-checking labels were eventually applied to posts spreading the false claim on Facebook, curbing the spread of this damaging misinformation.

1 – Edited videos, deepfakes and elections

In two very significant cases, AI tools and video editing were deployed at crucial electoral moments. In Slovakia, two days before the 2023 parliamentary elections, an audio deepfake targeting Michal Šimečka, the leader of the Progressive Slovakia party, began circulating on social media. The recording was manipulated to make it seem as if Šimečka was discussing plans for election fraud with a well-known local journalist, in an attempt to delegitimize both him and the vote itself shortly before election day. The fake audio spread rapidly across Meta platforms, reshared in dozens of posts that accumulated thousands of shares. AFP Fakty analyzed the clip, showing that it was an artificial manipulation with no basis in reality, which gave more context to users exposed to it on Facebook.

In November 2024, after the first round of the Romanian presidential elections – later annulled for having been influenced by disinformation campaigns and foreign interference – a manipulated video targeting Elena Lasconi, the pro-European candidate from the USR (Uniunea Salvați România), began circulating widely on social media, amassing over 80,000 shares. The video falsely depicted Lasconi admitting that she had no idea how to handle a war situation, a statement that, if true, would have severely damaged her credibility on national security matters.

However, fact-checkers at AFP’s Romanian bureau analyzed the video and showed that it had been edited to distort her real words. In the original interview, Lasconi did not express ignorance about war scenarios; rather, she dismissed the idea of an imminent conflict while outlining her approach to coordinating with NATO and key allies in the event of a crisis. The altered version stripped away this context, making it seem as if she lacked the competence to lead Romania through a potential military emergency, in an attempt to sway undecided voters through fear-based disinformation.

The fact-checking article published within the 3PFC helped mitigate the impact of this unjustly damaging false message, which nevertheless received thousands of shares, as Facebook is one of the most widely used social media platforms in the country.

Without 3PFC

None of the cases mentioned above spread only on Meta platforms, and the 3PFC was not always sufficient to limit their reach, since the current media ecosystem involves multiple channels of information, both traditional and new. What is certain, however, is that if Meta ends the 3PFC, similar falsehoods will be allowed to circulate without any label offering information from professional fact-checkers on sensitive topics. As explained later, the Community Notes model is likely to fail to tackle disinformation about polarizing issues promptly and effectively. This could have serious implications for public perception, leading to greater exposure to false news, whether it is an individual pretending to be a military officer with knowledge of an ongoing conspiracy, or a deepfake audio clip purporting to be a real conversation between public figures discussing a rigged election.

While not perfect and certainly open to improvement, the 3PFC has, according to the available evidence, been effective in countering disinformation in various situations. For instance, cross-platform analyses carried out by Maldita.es found that Facebook’s fact-checking system provided a higher rate of visible actions on disinformation content, outperforming similar efforts on other social media networks. A 2024 Harvard study on fact-checking interventions found that journalistic fact-checking labels have a tangible impact on how people in the US perceive online information.

While skepticism toward fact-checking exists, the study concluded that providing clear, transparent corrections can significantly reduce belief in false claims, especially when they come from independent and reputable organizations. On the other hand, other studies found that fact-checking labels are less effective in discouraging the spread of misinformation than account suspensions or bans; but whether and how disinformation content and its spreaders are penalized or removed remains the exclusive prerogative of social media platforms.

Crowdsourced fact-checking alone is not enough

Meta has justified ending its Third Party Fact-Checking Program by promoting crowdsourced fact-checking as an alternative, arguing that a user-driven system can help provide context to misleading claims. Zuckerberg mentioned a system similar to Community Notes, the model adopted by X, the platform owned by Elon Musk, which relies solely on voluntary contributions from users. However, multiple studies and journalistic investigations (as well as fact-checking organizations) have raised concerns about its effectiveness, particularly when dealing with rapidly spreading disinformation, as in the case of the DANA disaster mentioned above or of a stabbing in the UK that far-right activists exploited on X to fuel riots in the real world.

Unlike professional fact-checking, Community Notes relies on crowdsourced contributions and requires consensus among contributors with diverse viewpoints before a note appears. This process often delays responses to falsehoods, allowing disinformation to spread before corrections reach users, if they reach them at all, since consensus is not always possible on divisive issues in a polarized social media environment. The weakest point is that the model makes corrections depend on agreement across political views, whereas facts are not a matter of political opinion. At the same time, the crowdsourced model could be more effective against scams, dangerous commercial products or other threats that do not require consensus on highly complex situations or deeply polarizing issues.

The Community Notes model itself was conceived under Twitter’s previous management as an additional tool to combat disinformation, not as the only safeguard against falsehoods. The coexistence of professional and crowdsourced fact-checking, with the former more reliable for disinformation on sensitive and complex topics and the latter relatively effective against non-polarizing threats, may offer a balanced solution for building a safer digital space on platforms. Framing them as opposing alternatives rather than complementary tools is misguided. Instead of dismantling one system in favor of the other, a more effective strategy would be to integrate them, leveraging the strengths of each to counter misinformation more efficiently.

Tommaso Canetta, Coordinator of the EDMO fact-checking activities

Enzo Panizio, Journalist at Pagella Politica/Facta and EDMO

Photo: Anthony Quintano