An analysis by Factnameh, an Iranian fact-checking organization based in Canada and a member of the International Fact-Checking Network (IFCN)

Since launching Factnameh in 2017 to combat misinformation in Iran, we’ve monitored our fair share of sensitive events: presidential elections (three and counting), national protests, crackdowns, and the ongoing campaigns of disinformation that plague Iranian social media. But nothing prepared us for the scale and speed of misinformation we witnessed during the Iran-Israel conflict in 2024–2025.

This was the first major military conflict where generative AI played a central role in shaping public perception, and it might just be a preview of what’s to come.

From Protests to Propaganda: A Pattern of Misinformation

In past crises, whether natural disasters, elections, or violent crackdowns, misinformation often came in predictable forms: old videos, recycled photos, and miscaptioned images. These are tactics we’ve grown used to spotting. In the absence of real-time information, especially where media access is restricted, people share what they can. That absence creates an information vacuum that bad actors are always ready to fill.

This was true during the 2022 protests that sparked Iran’s “Woman, Life, Freedom” movement and also during past flare-ups in the region. But the Iran-Israel conflict — specifically, the strikes in June 2025 — represented a turning point.

Tehran in Flames, Truth in Doubt

On June 13, 2025, when reports of multiple explosions in Tehran began to circulate, we at Factnameh were braced for the usual flood of outdated images misattributed to the current event. But something surprising happened: we didn’t find any.

Instead, social media was flooded with authentic, graphic, citizen-generated content. There was no vacuum to fill. The immediacy and intensity of what had happened left little room — or need — for recycled visuals. Ironically, this time the misinformation flowed in reverse: authentic photos were falsely labelled as fake. One particularly harrowing image of a corpse in the rubble, claimed by some to be from Syria, turned out to be real and from Tehran itself.

Within 24 Hours: A New Kind of War Began

Soon after Iran retaliated with missile strikes, a new wave of disinformation emerged, this time using AI-generated videos and images.

The visuals were slick, dramatic, and in many cases, entirely fabricated. AI-generated scenes of a destroyed downtown Tel Aviv, downed F-35 jets, craters in Israeli cities, and protests that never happened quickly flooded Persian, Urdu, Arabic, and even Western social media. What made this moment different was the volume, sophistication, and accessibility of the tools being used.

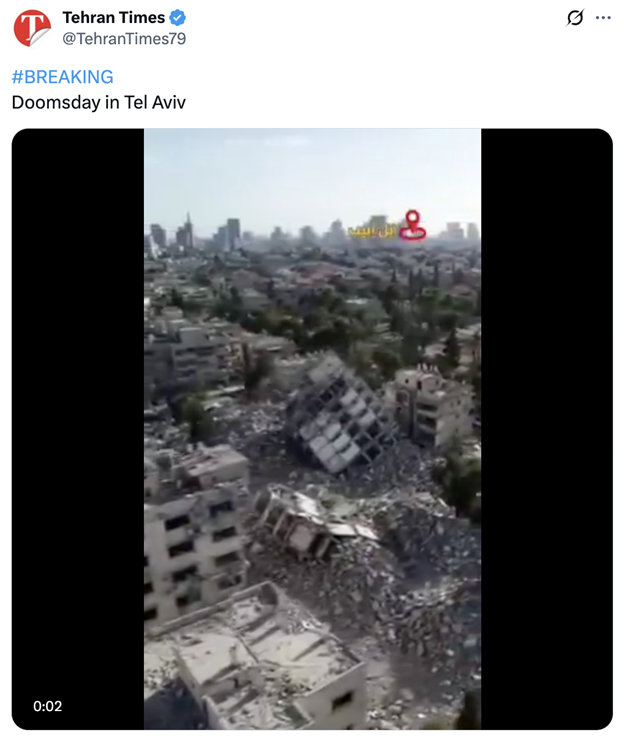

“Doomsday in Tel Aviv”: AI Meets State Propaganda

One of the first viral examples came from the official X account of the Tehran Times, an English-language newspaper affiliated with the Iranian state. The video, captioned “#Breaking Doomsday in Tel Aviv,” showed a bird’s-eye view of buildings reduced to rubble and a city in ruins. But the video wasn’t real; it was lifted from an Arabic TikTok account that regularly creates AI-generated “fantasy destruction” content.

Even after being debunked by Factnameh and flagged with a Community Note, the post remained online.

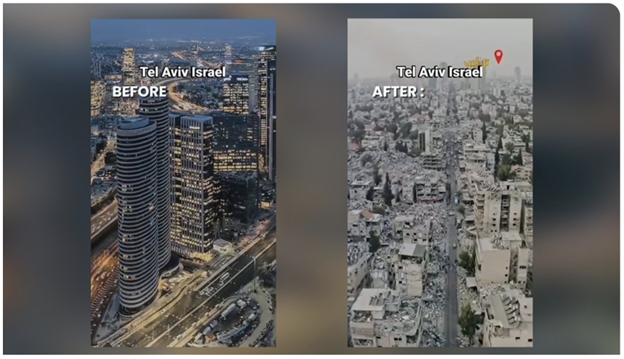

Iranian outlets like Fars News, Nour News, and Shargh soon followed suit, publishing a video compilation called “Tel Aviv Before and After the War with Iran”. Nearly all footage in the video was AI-generated, a digital mirage passed off as news.

The Myth of the Downed F-35s

Perhaps the most enduring false narrative was that Iran had successfully downed multiple Israeli F-35 fighter jets.

Images appeared online showing wreckage, but they didn’t hold up under scrutiny. One photo, widely aired on state TV and shared on Persian-language social media, had obvious flaws: a misplaced Star of David, untouched grass around the crash site, unrealistic scale, and a glaring engine. Using an AI detection tool, we estimated with 98% certainty that the image was machine-generated.

Another image, even more ridiculous in its proportions and gleefully shared by pro-Islamic Republic users, showed a jet half-buried in sand with a crowd of people around it.

The claim persisted for weeks, repeated by state officials and media, until it was finally, and sheepishly, retracted on state television on July 10. But the retraction did not acknowledge the falsehood; instead, the retreat was framed as a “Zionist psychological operation.”

More Fakes: Missiles, Craters, and Imaginary Protests

The disinformation campaign didn’t stop at fake jets and cities in ruins. Here’s a quick round-up of some of the most egregious examples:

- Press TV published (and quickly deleted) a fake video of Tel Aviv being hit by an Iranian missile, featuring a cartoonish explosion.

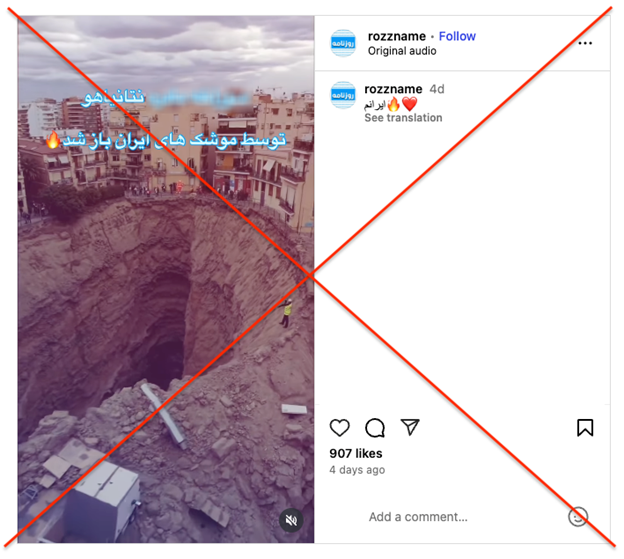

- An AI-generated crater in an unnamed city was shared across Instagram and YouTube as evidence of an Iranian strike on Israel, despite originating from an art account before the war began.

- False rumours spread that Pakistan had sent 750 ballistic missiles to aid Iran. The videos used to support this claim featured AI-generated trucks with missing drivers and people vanishing mid-motion.

- A video supposedly showing Israelis protesting for peace, with signs covered in Hebrew-looking gibberish, turned out to be entirely AI-generated, most likely created using Google’s Veo tool.

- The other side wasn’t innocent either: a similar AI-generated video emerged showing Iranians cheering “We Love Israel!”. Equally fake, equally absurd.

A Dangerous New Era of Digital Propaganda

This was the first major conflict where AI-generated disinformation operated at scale, and it definitely won’t be the last. The barrier to entry is now nearly nonexistent: anyone with a smartphone and a prompt can create convincing fakes in minutes.

This democratization of deception is both terrifying and fascinating. On one hand, it empowers citizen storytellers. On the other hand, it gives propagandists and malicious actors the tools to muddy the waters like never before.

Even more troubling is the public’s reaction. As AI fakes become more common, trust erodes. People begin to question everything, even real videos and images that contradict their biases. A newly surfaced, shocking (and authentic) video of an Israeli rocket hitting a busy Tehran street, sending cars flying into the air, was dismissed by many online as AI-generated regime propaganda. What we’re seeing now is a sort of hyper-cynicism, where even truth is suspected of being fiction.

What Can Be Done?

We need to respond, not just with fact-checking, but with education.

Media literacy must be redefined for the AI age. That means teaching people not just how to spot crude fakes, but how to question persuasive, high-quality disinformation. Vulnerable populations, especially older adults and less digitally literate users, need targeted support.

We must also build a wider ecosystem of resilience: partnering with tech companies to improve detection tools and push for clear labelling of AI-generated content, collaborating with libraries and schools to integrate media literacy into curricula, and establishing rapid-response networks of fact-checkers and researchers to contain viral falsehoods before they take hold. Policymakers, too, need expert guidance to draft smart regulation that tackles abuse without limiting innovation.

Sadly, this won’t be the last conflict we see, and the AI technology behind fake images and videos is improving rapidly. We need to be ready. Because AI wars are here, and they don’t just change what we see. They change what we believe.

Photo: Flickr/llee_wu